Author

Lucas Steinmann

Lucas is the CTO of preML and responsible for technical development at preML. He is enthusiastic about anything related to computer vision.

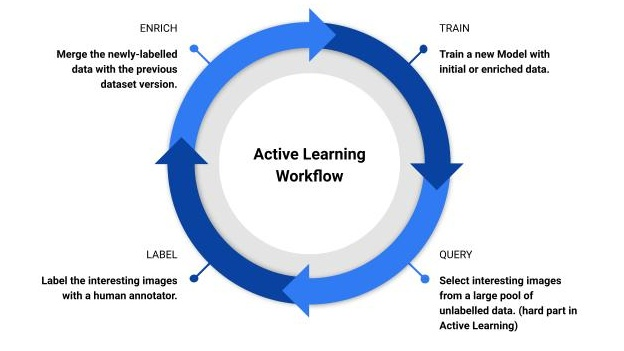

Active Learning is primarily about reducing the amount of data required to train an AI model. To do this, an AI model is used to select the data that is particularly interesting for training. “Interesting” in this context means relevant and instructive: the selected data enables the model to make significant progress in learning. Typically, these are previously unseen or particularly challenging scenarios, such as an unusual traffic situation for an autonomous driving model. By focusing on such data, the amount of data that has to be annotated can be reduced, saving valuable annotation time.

Figure 1: Schematic representation of the active learning workflow. The workflow starts from an initial set of manually or randomly selected data.
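The workflow in Figure 1 can be sketched as a simple pool-based loop: train on the annotated data, score the remaining samples by uncertainty, send the most uncertain ones to annotation, and repeat. This is a minimal illustration, not preML's implementation. The function `active_learning_loop` and its `train` and `uncertainty` callables are hypothetical placeholders for a real model and uncertainty measure, and annotation is reduced to moving a sample into the labeled set.

```python
from typing import Callable, Hashable, List, Sequence, Set, TypeVar

Sample = TypeVar("Sample", bound=Hashable)


def active_learning_loop(
    pool: Sequence[Sample],
    initial: Sequence[Sample],
    train: Callable[[List[Sample]], object],
    uncertainty: Callable[[object, Sample], float],
    budget_per_round: int,
    rounds: int,
) -> List[Sample]:
    """Return all samples selected for annotation, in selection order.

    `train` and `uncertainty` are hypothetical hooks standing in for a real
    model and a real uncertainty measure.
    """
    labeled: List[Sample] = list(initial)  # manually or randomly chosen seed data
    chosen: Set[Sample] = set(labeled)
    for _ in range(rounds):
        model = train(labeled)  # (re)train on everything annotated so far
        candidates = [s for s in pool if s not in chosen]
        if not candidates:
            break  # pool exhausted
        # Rank unlabeled samples by model uncertainty, most uncertain first.
        candidates.sort(key=lambda s: uncertainty(model, s), reverse=True)
        batch = candidates[:budget_per_round]
        labeled.extend(batch)  # in practice: hand the batch to human annotators
        chosen.update(batch)
    return labeled
```

With a pool of ten sample IDs, a seed set of `[0]`, and a dummy uncertainty that simply grows with the ID, two rounds with a budget of two select the IDs 9, 8, 7 and 6, in that order.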

Data Poisoning and Active Learning

The active learning process not only offers efficiency benefits, but can also help to detect data poisoning, i.e. the deliberate manipulation of training data. To determine which data is “interesting” for training, active learning methods calculate the model's uncertainty for individual data points. A high uncertainty value indicates that a data point, for example an image, contains a lot of unknown or complex information.
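The post does not specify which uncertainty measure is meant at this point. One common choice for a classifier is the entropy of its predicted class probabilities; the sketch below assumes that measure, and `predictive_entropy` is a hypothetical helper name.

```python
import math
from typing import Sequence


def predictive_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of one predicted class distribution.

    0.0 means the model is fully certain; log(num_classes) is the maximum,
    reached when all classes are equally likely.
    """
    total = sum(probs)
    if not math.isclose(total, 1.0, rel_tol=1e-6):
        raise ValueError(f"probabilities must sum to 1, got {total}")
    # Terms with p == 0 contribute nothing (lim p->0 of p*log p is 0).
    return -sum(p * math.log(p) for p in probs if p > 0.0)
```

A confident prediction such as `[0.9, 0.1]` therefore scores lower than an undecided `[0.5, 0.5]`, which reaches the two-class maximum of log 2.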

Typically, the average uncertainty of the model decreases over time as it gets better at recognizing and processing the data. However, if the uncertainty suddenly increases, this could indicate a change in data quality. Such a change is not necessarily caused by data poisoning; external factors such as poorer image quality could also be the reason. Nevertheless, increased uncertainty should be regarded as a warning signal that the data may have been manipulated.
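One way to turn this warning signal into an automatic check is to compare the mean uncertainty of each new batch against a rolling baseline of recent batches. The `UncertaintyMonitor` class, its window size and its tolerance factor are assumptions chosen for illustration; a flagged batch only means the data deserves a closer look, not that it is poisoned.

```python
from collections import deque
from statistics import mean
from typing import Deque, Iterable


class UncertaintyMonitor:
    """Flag a batch whose mean uncertainty jumps above the recent baseline.

    `window` and `tolerance` are illustrative defaults, not tuned values.
    """

    def __init__(self, window: int = 5, tolerance: float = 1.5) -> None:
        self.history: Deque[float] = deque(maxlen=window)  # recent clean batch means
        self.tolerance = tolerance  # allowed ratio of new mean to baseline mean

    def check(self, batch_uncertainties: Iterable[float]) -> bool:
        """Return True if this batch should be treated as a warning signal."""
        batch_mean = mean(batch_uncertainties)
        suspicious = bool(self.history) and batch_mean > self.tolerance * mean(self.history)
        if not suspicious:
            # Only unflagged batches update the baseline, so a suspicious
            # batch cannot drag the reference level upward.
            self.history.append(batch_mean)
        return suspicious
```

Because the baseline is built from recent batches, the normal downward trend in uncertainty is absorbed automatically, while a sudden jump stands out.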

This is particularly relevant for self-learning systems or systems that are trained by non-specialized users. An increase in uncertainty may indicate that the system is using data that could “confuse” the model. In this case, training can be interrupted to investigate further. Because the model state is saved after each iteration, a previous, safe model state can be restored in an emergency. The saved iterations also make it possible to determine when the malicious data entered the training process and where it came from.
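Restoring a safe state presupposes that each iteration's checkpoint is stored together with a record of where that iteration's new data came from. The in-memory `CheckpointStore` below is a hypothetical sketch of that bookkeeping; a production system would persist checkpoints to disk or object storage and track data provenance in more detail.

```python
import copy
from typing import Dict, List, Optional, Tuple


class CheckpointStore:
    """In-memory record of model states and data provenance per iteration."""

    def __init__(self) -> None:
        self._states: Dict[int, dict] = {}  # iteration -> model state after training
        self._sources: Dict[int, List[str]] = {}  # iteration -> sources of newly added data

    def save(self, iteration: int, model_state: dict, new_data_sources: List[str]) -> None:
        # Deep-copy so later training steps cannot mutate a stored checkpoint.
        self._states[iteration] = copy.deepcopy(model_state)
        self._sources[iteration] = list(new_data_sources)

    def rollback(self, suspicious_iteration: int) -> Tuple[Optional[dict], List[str]]:
        """Return the latest state saved before `suspicious_iteration`
        (so it was never trained on that iteration's data), plus the
        sources of the data added in the suspicious iteration."""
        earlier = [i for i in self._states if i < suspicious_iteration]
        safe_state = copy.deepcopy(self._states[max(earlier)]) if earlier else None
        return safe_state, list(self._sources.get(suspicious_iteration, []))
```

In this sketch, a warning raised after iteration 2 restores the state saved after iteration 1 and returns the sources of iteration 2's data for investigation.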

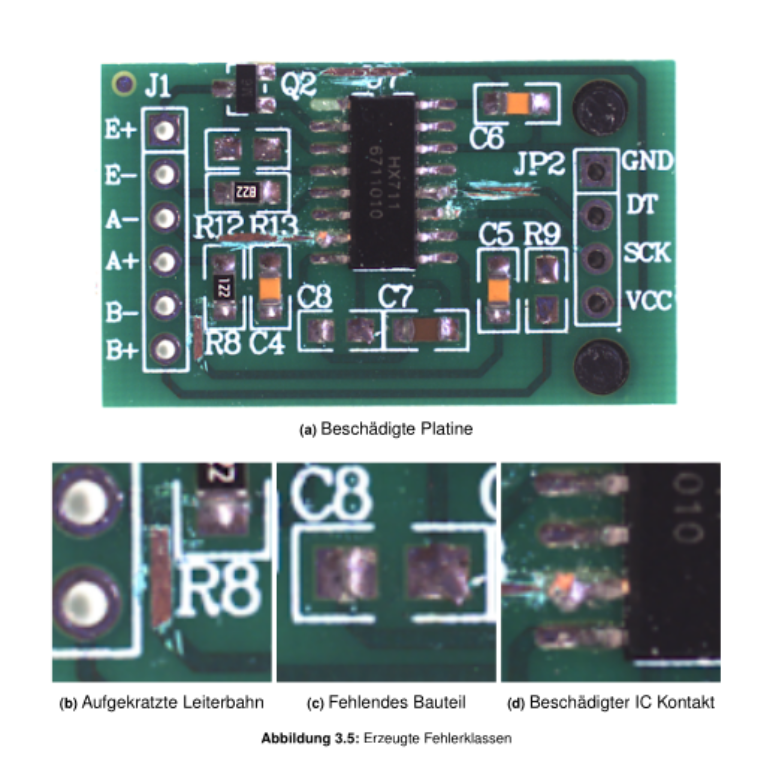

Use case: Defect detection on circuit boards

An interesting example of the use of active learning is defect detection on circuit boards. At preML, a special camera system was used to capture images of circuit boards on which defects had been intentionally created. To calculate the uncertainty, model ensembles were used, i.e. several models trained on slightly different training data were combined. A consensus score measures how much the individual predictions deviate from one another and thus quantifies the uncertainty.

Figure 2: Example data and defects from the damaged circuit board use case.
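The post does not give the exact formula for the consensus score. As an assumed stand-in, the sketch below measures ensemble disagreement for one image as the standard deviation of the members' class probabilities, averaged over classes. `ensemble_disagreement` is a hypothetical name, and other disagreement measures (for example vote entropy) would be equally plausible.

```python
from statistics import mean, pstdev
from typing import Sequence


def ensemble_disagreement(member_probs: Sequence[Sequence[float]]) -> float:
    """Uncertainty of one image from an ensemble's class probabilities.

    member_probs[m][c] is the probability that ensemble member m assigns
    to class c (e.g. c = 0 "ok", c = 1 "defect"). Returns the population
    standard deviation across members, averaged over classes: 0.0 when
    all members agree exactly, larger as their predictions diverge.
    """
    num_classes = len(member_probs[0])
    if any(len(p) != num_classes for p in member_probs):
        raise ValueError("all ensemble members must predict the same classes")
    per_class_spread = [pstdev([p[c] for p in member_probs]) for c in range(num_classes)]
    return mean(per_class_spread)
```

Images with the highest score are those on which the ensemble members disagree most, and would be the first candidates for annotation.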

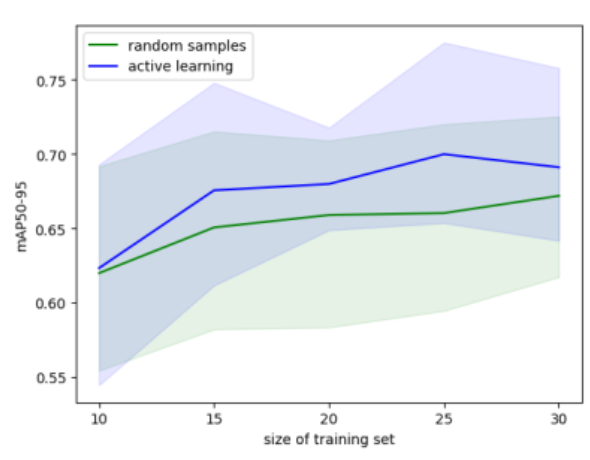

The images with the highest uncertainty values were prioritized and used for training. This allowed the required amount of data to be reduced by up to 50 % without compromising the quality of the model.

Figure 3: Result of the study: with only 15 images in the active learning workflow, better performance was achieved than with regular training.

Conclusion

Active Learning can significantly reduce data requirements, even if only small data sets are available. It is a particularly valuable tool in areas such as automatic quality control. At preML, Active Learning is used not only to increase efficiency, but also to detect potentially erroneous or manipulated data. This makes Active Learning an attractive method for companies that want to make the most of their training data and at the same time be protected against data poisoning.
