Author

David Fehrenbach

David is Managing Director of preML and writes about technology and business-related topics in computer vision and machine learning.

A major advantage of anomaly detection models is that they are trained exclusively on images that show the ideal appearance of an object, so only images of defect-free objects are required. Any later deviation that is not represented in this training data is automatically flagged as an anomaly, and this is precisely what makes the method so efficient and straightforward. The flip side is that a new dataset must cover all permitted variations: an acceptable variation that is missing from the training data will also be flagged as an anomaly. Find out how to do this here!

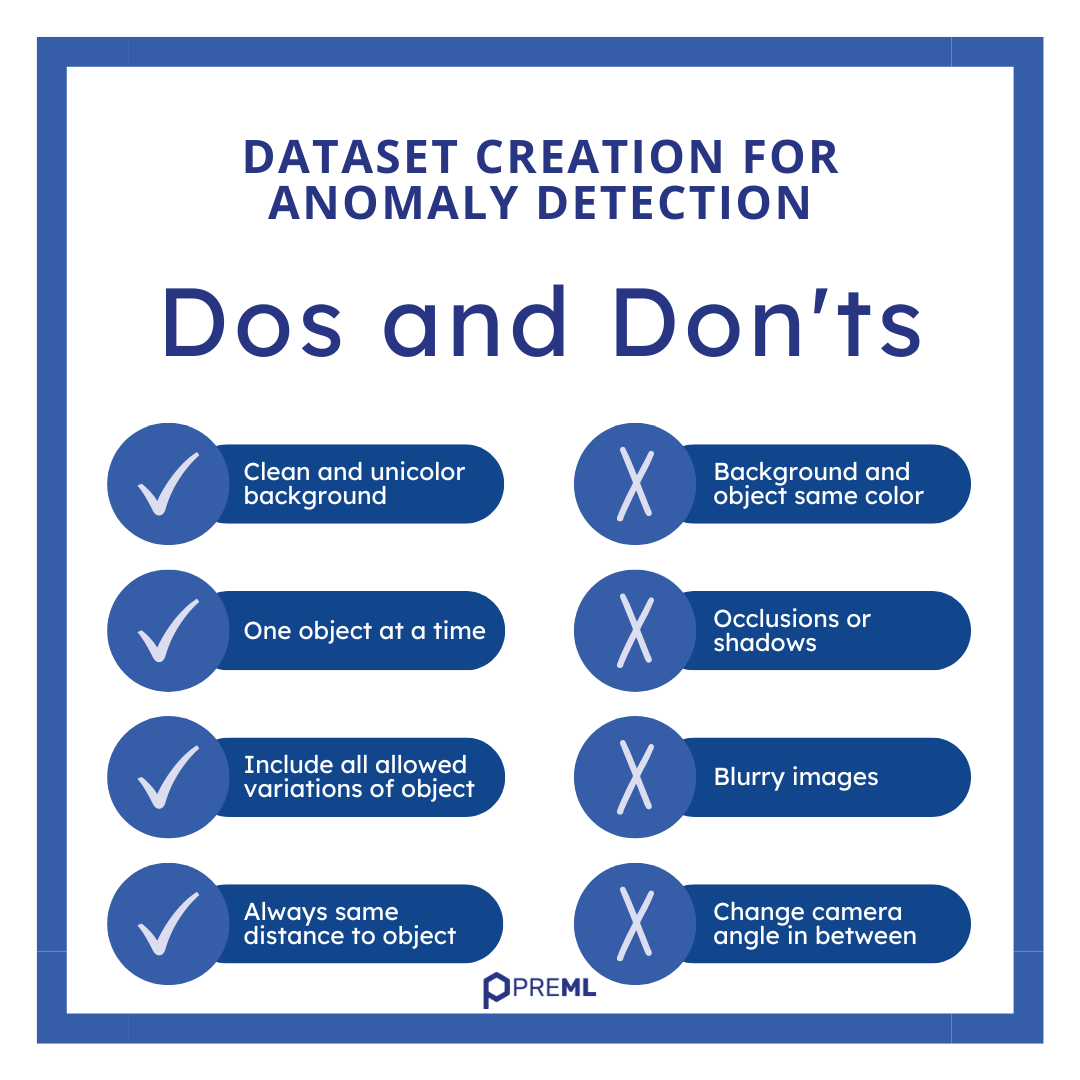

Figure 1: Our Cheat Sheet – Dos and Don’ts when creating a dataset for Anomaly Detection (c) preML GmbH

The quality of the dataset is crucial: what matters

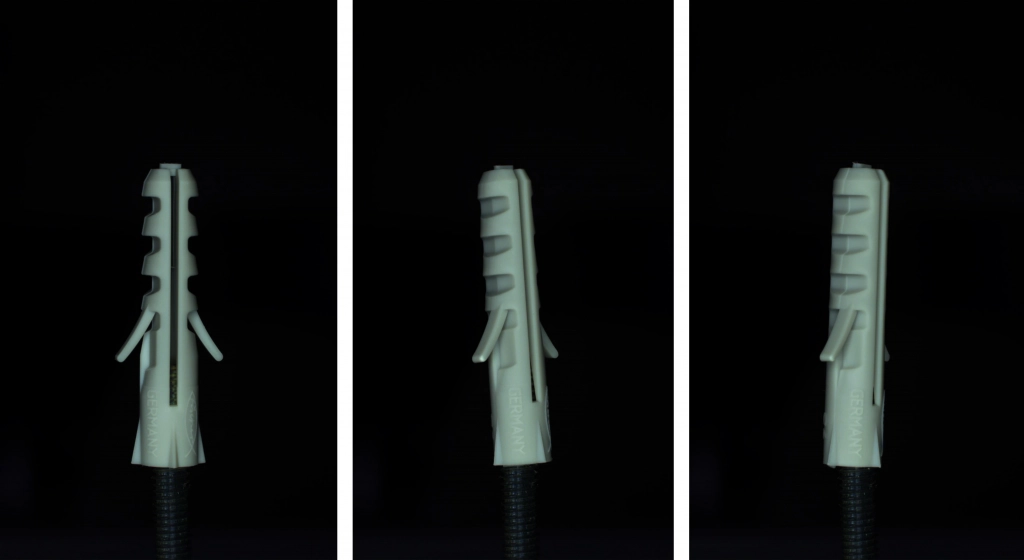

Ideally, the training data should be recorded under the same conditions (e.g. viewing angle, lighting, positioning) as the later test images, and these conditions should stay constant. If they cannot be kept constant, the training data should cover as many of the conditions that will occur in operation as possible.

Because anomaly detection models do not distinguish between the object and the background, the background should also be kept as constant as possible. A clean, single-color background additionally makes training easier and reduces susceptibility to errors.

If the object can appear in different orientations, every permitted orientation should also be present in the training data.

Figure 2: “Normal” training data for an anomaly model: clear contrast to a uniform background, all permitted rotations and uniform lighting. (c) preML GmbH
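One way to act on this advice is to note the orientation of each training image while capturing it and to check that every permitted orientation is covered before training. The sketch below is hypothetical: the set `PERMITTED_ROTATIONS`, the per-image rotation labels and the `min_count` parameter are assumptions for illustration and are not part of the preML platform.

```python
from collections import Counter

# Hypothetical: the orientations (in degrees) in which the part may appear.
PERMITTED_ROTATIONS = {0, 90, 180, 270}

def missing_rotations(image_rotations, permitted=PERMITTED_ROTATIONS, min_count=1):
    """Return the permitted rotations that occur fewer than min_count times."""
    counts = Counter(image_rotations)  # unseen rotations count as 0
    return sorted(r for r in permitted if counts[r] < min_count)

# Hypothetical rotation labels noted while capturing the training images.
captured = [0, 0, 90, 90, 180, 0]
print(missing_rotations(captured))  # [270]
```

Raising `min_count` turns the same check into a rough balance requirement, for example at least two images per orientation.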

Optimum number of images and iterative model improvement for precise anomaly detection

The number of “normal” images required for training depends on how much variation is permitted. For industrially manufactured components that always look the same and always lie in the same position on the conveyor belt, very few images (7-10) may be enough to achieve good initial results. If there are unavoidable variations, as with apples, which differ in shape, color and orientation, more images are required (30-40).

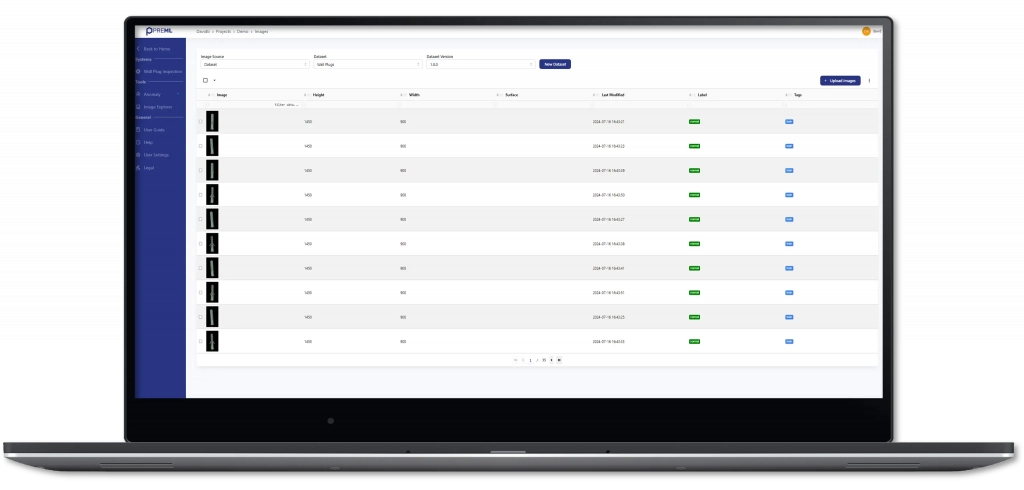

With preML’s iterative approach, a model can initially be trained with just a few images. When the model runs on a live system, such as the webcam tool in the demo version, misclassified images can simply be added to the dataset and the model retrained with a few clicks. This process can be repeated as often as necessary, so it is advisable to start with a few images and improve the model continuously.

Figure 3: Data sets can be managed in the platform’s Image Explorer and the model can be easily retrained with the corresponding images in the event of incorrect decisions. (c) preML GmbH
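The iterative loop described above can be illustrated with a toy model. This is a minimal sketch, assuming a simple nearest-neighbor anomaly score on feature vectors in place of a real model, and assuming that only normal images the model wrongly flagged (false positives) are added to the training set, since an anomaly model is trained on defect-free images only. The function names and the fixed `threshold` are hypothetical and do not reflect preML's implementation.

```python
import numpy as np

def nn_score(bank, x):
    """Toy anomaly score: distance from x to the nearest normal training sample."""
    return float(np.min(np.linalg.norm(bank - x, axis=1)))

def review_and_retrain(bank, samples, is_normal, threshold):
    """One review round over live samples whose ground truth is known.

    Normal samples that the model flags as anomalous (false positives) are
    appended to the training set; for this memory-based toy model, that
    append is the whole "retraining" step.
    """
    added = 0
    for x, normal in zip(samples, is_normal):
        flagged = nn_score(bank, x) > threshold
        if flagged and normal:
            bank = np.vstack([bank, x])
            added += 1
    return bank, added

# Start with only two normal samples, then review three live samples.
bank = np.array([[0.0, 0.0], [1.0, 0.0]])
live = np.array([[3.0, 0.0], [3.1, 0.0], [10.0, 10.0]])
bank, added = review_and_retrain(bank, live, [True, True, False], threshold=0.5)
print(added, bank.shape)  # 1 (3, 2)
```

The second live sample is accepted only because the first, similar one was added to the training set in the same round, which mirrors how adding a few misclassified images can remove a whole group of false alarms. The genuinely anomalous third sample is never added.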

Automatic threshold determination with anomaly images

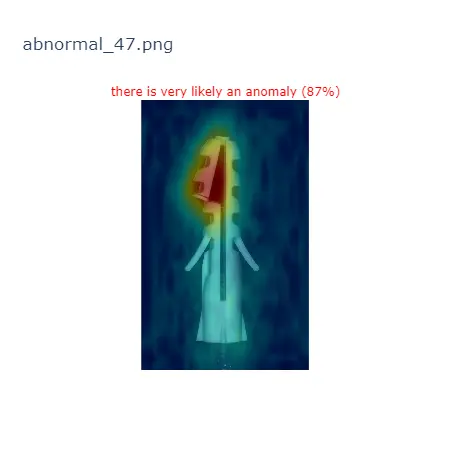

The threshold determines the anomaly score above which a deviation is classified as an anomaly, and therefore how sensitively the model reacts to deviations. Raising the threshold causes fewer areas to be classified as anomalous; lowering it causes more. The threshold can be adjusted manually while testing with defect samples. With the preML software, it is also possible to determine the best possible threshold automatically.

Automatic determination, however, requires test images, in particular images that contain anomalies. The software then optimizes the threshold so that as many test images as possible are classified correctly.
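Both uses of the threshold can be sketched in a few lines of NumPy. `anomaly_mask` applies a threshold to a per-pixel anomaly heat map, so raising the threshold marks fewer pixels, and `best_threshold` picks the image-level threshold that classifies the most labeled test images correctly. Reading “as many test images as possible classified correctly” as plain accuracy, scoring each image by a single number such as the maximum of its heat map, and using a strict `>` comparison are all assumptions of this sketch; the criterion preML's software actually optimizes may differ.

```python
import numpy as np

def anomaly_mask(heatmap, threshold):
    """Mark pixels whose anomaly score is strictly above the threshold."""
    return heatmap > threshold

def best_threshold(scores, labels):
    """Return (threshold, accuracy) maximizing accuracy on labeled test images.

    scores: image-level anomaly scores (e.g. the maximum of each heat map).
    labels: True for images that contain an anomaly.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    # With a strict ">" rule, these candidates cover every possible split:
    # one value below all scores (flag everything) plus each observed score.
    candidates = np.concatenate(([scores.min() - 1.0], np.unique(scores)))
    best_t, best_acc = float(candidates[0]), -1.0
    for t in candidates:
        acc = float(np.mean((scores > t) == labels))
        if acc > best_acc:  # candidates are ascending, so ties keep the lower one
            best_t, best_acc = float(t), acc
    return best_t, best_acc

heatmap = np.array([[0.1, 0.2, 0.9],
                    [0.3, 0.6, 0.8],
                    [0.1, 0.2, 0.4]])
for t in (0.3, 0.5, 0.7):
    print(t, int(anomaly_mask(heatmap, t).sum()))
# prints "0.3 4", "0.5 3", "0.7 2": a higher threshold marks fewer pixels

print(best_threshold([0.1, 0.2, 0.3, 0.7, 0.8, 0.9],
                     [False, False, False, True, True, True]))  # (0.3, 1.0)
```

Without anomalous test images, the optimum collapses to “flag nothing”, which is why images containing anomalies are needed for this step.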

Choosing the correct model

In most cases, PatchCore will be the right model for the application, as it usually delivers better results. If the position of the object is important, i.e. if a displacement is to be recognized as an error, the PaDiM model should be selected.
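Why PaDiM reacts to a displacement while PatchCore largely does not can be seen in a heavily simplified toy version of their scoring rules. Roughly, PatchCore compares each patch feature against a single memory bank of normal patch features pooled from all positions, whereas PaDiM fits a separate Gaussian to the normal features at each patch position and scores with the Mahalanobis distance. The sketch below uses 2-D toy vectors instead of pretrained CNN features and omits parts of both methods (for example PatchCore's coreset subsampling and PaDiM's dimensionality reduction); the `0.01` covariance regularization and all sizes are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
P, C, N = 4, 2, 20              # patch positions, feature channels, normal images
OBJECT = np.array([1.0, 0.0])   # toy feature of a patch showing the object
BACKGROUND = np.zeros(C)        # toy feature of a background patch

def toy_features(object_pos):
    """Toy feature map of shape (P, C) with the object at one patch position."""
    feats = np.tile(BACKGROUND, (P, 1))
    feats[object_pos] = OBJECT
    return feats

# Normal training images: object always at position 0, plus a little noise.
train = np.stack([toy_features(0) + rng.normal(0.0, 0.05, (P, C)) for _ in range(N)])

# PatchCore-like: one memory bank of all normal patches; position is discarded.
bank = train.reshape(-1, C)

def patchcore_score(feats):
    """Image score = largest nearest-neighbor distance of any patch to the bank."""
    dists = np.linalg.norm(feats[:, None, :] - bank[None, :, :], axis=2)  # (P, N*P)
    return float(dists.min(axis=1).max())

# PaDiM-like: one Gaussian (mean, regularized covariance) per patch position.
mean = train.mean(axis=0)  # (P, C)
cov_inv = np.stack([
    np.linalg.inv(np.cov(train[:, p, :], rowvar=False) + 0.01 * np.eye(C))
    for p in range(P)
])

def padim_score(feats):
    """Image score = largest per-position Mahalanobis distance."""
    diff = feats - mean
    sq = np.einsum("pc,pcd,pd->p", diff, cov_inv, diff)
    return float(np.sqrt(sq).max())

normal, shifted = toy_features(0), toy_features(1)
print(np.isclose(patchcore_score(normal), patchcore_score(shifted)))  # True
print(padim_score(shifted) > 5 * padim_score(normal))                 # True
```

The PatchCore-like score is the same for the shifted image because the object's feature still has a close match in the pooled bank; the PaDiM-like score rises sharply because position 1 never showed the object during training.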

And now have fun trying it out!

Figure 4: Output of an anomaly model for detecting defects in wall anchors, shown as a heat map (c) preML GmbH

Cheers!

David

Feel free to contact us anytime at contact@preml.io
